Important Update to Google Analytics

According to some reports, one-third of all web traffic is from bots. Google has just made a small but important update to Google Analytics: it now offers an option to exclude traffic from known bots and spiders.

Announced on Google+ and reported by TechCrunch, the change finally makes it easy to exclude bots and spiders from your Google Analytics data. That “bogus” traffic can easily skew your numbers and give you a misleading picture of how your website is really performing.

As TechCrunch puts it,

Depending on your site, you may see some of your traffic numbers drop a bit. That’s to be expected, though, and the new number should be somewhat closer to reality than your previous ones. Chances are good that it’ll still include fake traffic, but at least it won’t count hits to your site from friendly bots.

Once you have opted in to excluding this kind of traffic, Analytics will automatically start filtering your data by comparing the user agents of hits to your site against the known user agents on the IAB’s “International Spiders & Bots list,” which is updated monthly.
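To make the idea concrete, here is a minimal Python sketch of user-agent filtering. It is only an illustration: Google has not published how Analytics matches hits, and the IAB list is licensed, so the three patterns, the `Hit` class, and the function names below are all hypothetical stand-ins.

```python
from dataclasses import dataclass

# Hypothetical stand-ins for list entries; the real IAB list is licensed
# and far longer, and its exact matching rules are not shown here.
KNOWN_BOT_PATTERNS = ("googlebot", "bingbot", "baiduspider")


@dataclass
class Hit:
    """One recorded page hit (hypothetical structure for this sketch)."""
    path: str
    user_agent: str


def is_known_bot(user_agent: str, patterns=KNOWN_BOT_PATTERNS) -> bool:
    """Return True if the user agent contains any known bot pattern."""
    ua = user_agent.lower()
    return any(pattern in ua for pattern in patterns)


def exclude_known_bots(hits):
    """Keep only the hits whose user agent matches no known bot pattern."""
    return [hit for hit in hits if not is_known_bot(hit.user_agent)]


hits = [
    Hit("/", "Mozilla/5.0 (Windows NT 10.0; Win64; x64) Firefox/120.0"),
    Hit("/", "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"),
    Hit("/blog", "Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)"),
]
human_hits = exclude_known_bots(hits)
```

Note that a bot sending a browser-like user agent would pass straight through a filter like this, which is why the reported numbers can still include fake traffic.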

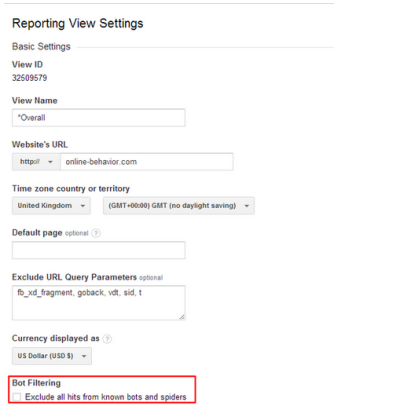

Until now, filtering this kind of traffic out was a manual and highly imprecise job. All it takes now is a trip into Analytics’ reporting view settings to enable this feature and you’re good to go.
